AtScale had the opportunity to collaborate with Andrew Brust, Founder & CEO of Blue Badge Insights, to explore the history of the semantic layer concept. This post summarizes his perspective, as published in a recent whitepaper, The Semantic Layer: Why It’s Critical to Analytics Success and Always Has Been. Check out the full piece here.

The term “semantic layer” has been around for a while, but it has recently seen a surge in popularity within data and analytics circles. As companies adapt to the modern world of cloud analytics, they’re looking for ways to effectively harness large volumes of data to make business decisions. The semantic layer can help them do just that.

While the semantic layer may seem like a new solution, it actually addresses a persistent challenge: giving individual business domains control over data without leading to chaos. By using a hub-and-spoke analytics model, the semantic layer can ensure performance and consistency while still enabling autonomy.

In this post, we’ll discuss the need for semantic models and a semantic layer, including what led to their development and how they enable actionable insights at scale.

From OLAP Systems and Data Lakes to Cloud Data Warehouses

In the early days of analytics and business intelligence, most data was modeled within a specialized backend database called an Online Analytical Processing (OLAP) system. OLAP systems understood the data model and anticipated the types of queries to expect. This allowed them to pre-calculate data aggregates into cubes, which improved query performance.
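
To make the idea of pre-calculated aggregates concrete, here is a minimal Python sketch of a cube. It is an illustration only, not how any particular OLAP product works: the sample rows, the dimension names, and the `build_cube` and `query` helpers are all hypothetical. The sketch sums a measure for every combination of dimensions up front, so that a later query becomes a dictionary lookup rather than a scan of the raw rows.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical fact rows; the column names are assumptions for illustration.
ROWS = [
    {"region": "EMEA", "product": "A", "year": 2023, "sales": 100},
    {"region": "EMEA", "product": "B", "year": 2023, "sales": 50},
    {"region": "APAC", "product": "A", "year": 2023, "sales": 70},
    {"region": "APAC", "product": "A", "year": 2024, "sales": 30},
]
DIMENSIONS = ("region", "product", "year")


def build_cube(rows, dimensions, measure):
    """Pre-compute SUM(measure) for every subset of the dimensions."""
    cube = {}
    for size in range(len(dimensions) + 1):
        for dims in combinations(dimensions, size):
            totals = defaultdict(int)
            for row in rows:
                key = tuple(row[d] for d in dims)
                totals[key] += row[measure]
            cube[dims] = dict(totals)
    return cube


def query(cube, **filters):
    """Answer a query by lookup instead of scanning the raw rows."""
    # Keep dimension order consistent with how the cube keys were built.
    dims = tuple(d for d in DIMENSIONS if d in filters)
    key = tuple(filters[d] for d in dims)
    return cube[dims].get(key, 0)


CUBE = build_cube(ROWS, DIMENSIONS, "sales")
print(query(CUBE))                            # grand total: 250
print(query(CUBE, region="EMEA"))             # 150
print(query(CUBE, region="APAC", year=2023))  # 70
```

Note that three dimensions already produce eight aggregate tables, and the number doubles with every dimension added. That growth is one way to see why this approach became expensive as data volumes and domains of analysis grew.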

The OLAP approach worked for small domains of analysis and small volumes of data, but it was too expensive and complex for the Big Data era. Because the data was pre-modeled, companies could only answer the “known unknowns,” or predefined questions, so they were never able to become fully data-driven organizations.

To overcome the limits of the traditional OLAP approach, many organizations shifted to large-scale monolithic data lakes built on platforms like Hadoop. Data lakes enabled companies to store and access large quantities of raw data to uncover new patterns and answer “unknown unknowns” questions. However, the data still needed to be modeled during each analysis, which slowed time to insight and created new inefficiencies.

Since unstructured data lakes didn’t fully meet the needs of data analytics, many organizations looked to data architectures of the past. Data warehouses, though they predate data lakes, have made a comeback in the cloud with solutions like Snowflake and BigQuery. But take heed: these cloud warehouse solutions still lack the data modeling capabilities necessary to adequately perform routine analysis, drill-down analysis, and more.

The Need for Semantic Modeling

Pre-defined models, as in traditional OLAP systems and data warehouses, and “last minute” models, as in data lakes and data lakehouses, each have their benefits. But we can’t ignore the missing piece: semantics. Semantics, or the context and meaning around data, is critically important for making data analysis-ready.

When you pre-define these models – as was done in the OLAP era – business users in specific domains can very easily and quickly perform analysis on the data. In turn, this helps business users get comfortable with using data and helps encourage adoption throughout the organization, ultimately leading to a data-driven culture.

It’s important to note that having a model for routine “known unknowns” analysis doesn’t prevent exploratory “unknown unknowns” analysis. Instead, the ability to perform both types of analysis from the same data is a powerful way to bridge the gap between business intelligence and data science teams.

Delivering Actionable Insights at Scale

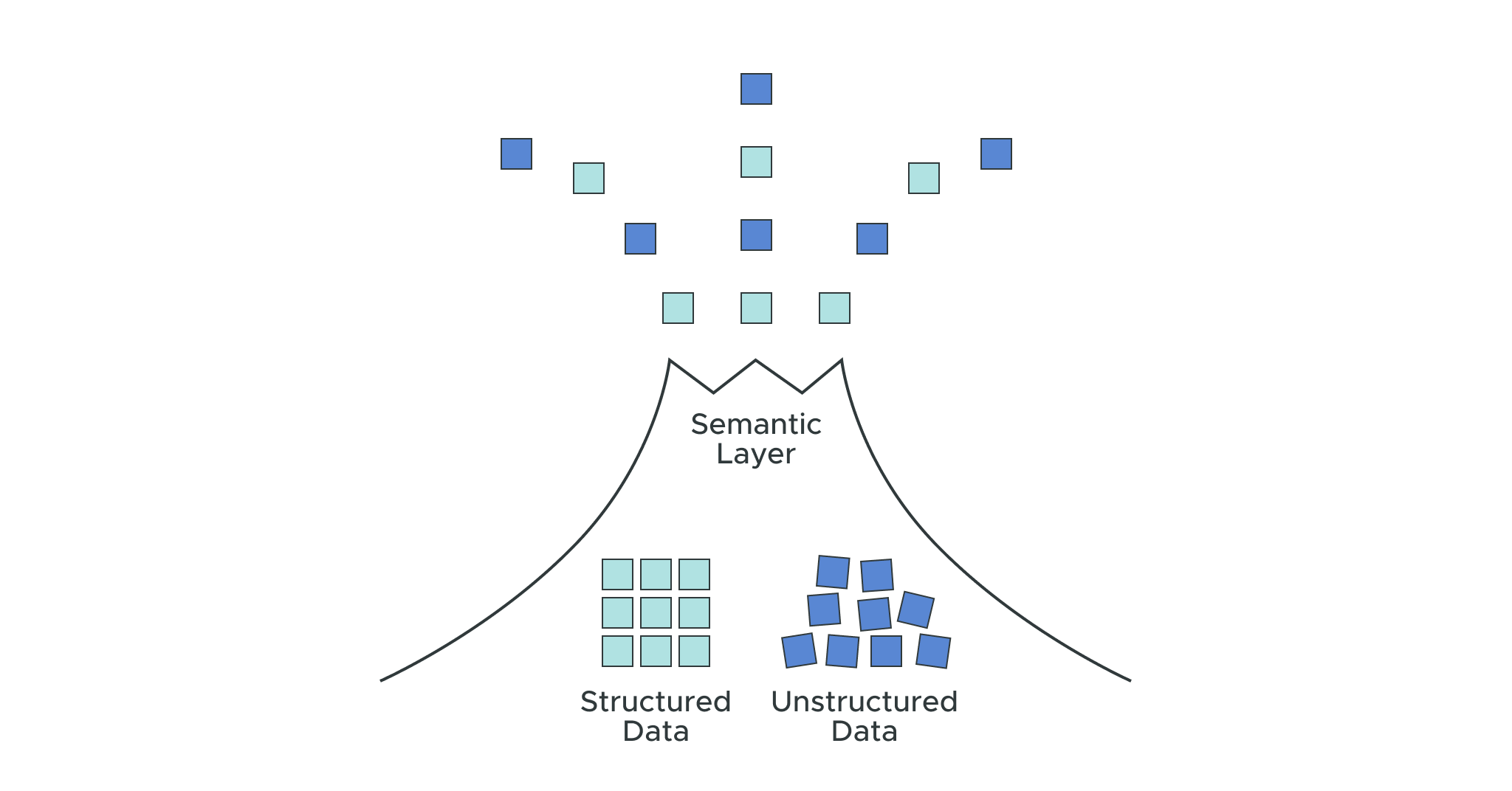

The semantic layer provides a centralized model for business intelligence and data science teams. It turns a vast array of largely undocumented and undiscoverable datasets into a contextualized and accessible data model.

More specifically, the semantic layer is organized into metrics, dimensions, and hierarchies that make sense in a business context. This retains the scalability and performance of modern data lakes while also providing the traditional OLAP-era capabilities necessary for business domain-specific analytics.
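
As a rough illustration of what metrics, dimensions, and hierarchies might look like in practice, here is a small Python sketch. It is not AtScale's API or modeling language. The `Metric`, `Dimension`, and `SemanticModel` classes, the table `sales.orders`, and its column names are all assumptions made up for this example. The sketch shows business-friendly names being translated into a SQL query, and a hierarchy being used to find the next drill-down level.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str        # business-facing name, e.g. "revenue"
    expression: str  # SQL aggregate that defines the metric


@dataclass(frozen=True)
class Dimension:
    name: str    # business-facing name, e.g. "year"
    column: str  # physical column in the underlying table


@dataclass
class SemanticModel:
    table: str
    metrics: dict      # metric name -> Metric
    dimensions: dict   # dimension name -> Dimension
    hierarchies: dict  # hierarchy name -> dimension names, coarse to fine

    def drill_down(self, hierarchy, level):
        """Return the next finer level in a hierarchy, or None at the bottom."""
        levels = self.hierarchies[hierarchy]
        i = levels.index(level)
        return levels[i + 1] if i + 1 < len(levels) else None

    def to_sql(self, metric, by):
        """Translate a business question into SQL against the physical table."""
        m = self.metrics[metric]
        cols = [self.dimensions[d].column for d in by]
        select = ", ".join(cols + [f"{m.expression} AS {m.name}"])
        sql = f"SELECT {select} FROM {self.table}"
        if cols:
            sql += " GROUP BY " + ", ".join(cols)
        return sql


# Hypothetical model; the table and column names are invented for this example.
model = SemanticModel(
    table="sales.orders",
    metrics={"revenue": Metric("revenue", "SUM(amount)")},
    dimensions={
        "year": Dimension("year", "order_year"),
        "quarter": Dimension("quarter", "order_quarter"),
        "month": Dimension("month", "order_month"),
    },
    hierarchies={"time": ["year", "quarter", "month"]},
)

print(model.to_sql("revenue", by=["year"]))
print(model.drill_down("time", "year"))
```

The point of the sketch is that a business user asks for "revenue by year" and never needs to know that the underlying columns are called `amount` and `order_year`.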

Since a semantic layer enables domain-specific semantic models, there is enough autonomy for business teams to create data definitions that suit their needs. At the same time, the semantic layer creates a common denominator for business domains, ensuring consistency without compromising autonomy. This means organizations can effectively shift to a hub-and-spoke model, where the semantic layer itself is the “hub” and the “spokes” are the individual semantic models at the business-domain level.
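
The hub-and-spoke idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of any product: the `SemanticHub` class, its methods, and the metric definitions are hypothetical. The hub owns the shared definitions, each domain registers its own additions, and a domain is not allowed to redefine a shared metric, which is where the consistency comes from.

```python
class SemanticHub:
    """Toy hub: shared metric definitions plus per-domain additions."""

    def __init__(self, shared_metrics):
        self._shared = dict(shared_metrics)
        self._spokes = {}

    def register_spoke(self, domain, local_metrics):
        """Add a domain model; reject attempts to redefine shared metrics."""
        conflicts = sorted(set(local_metrics) & set(self._shared))
        if conflicts:
            raise ValueError(f"{domain} redefines shared metrics: {conflicts}")
        self._spokes[domain] = dict(local_metrics)

    def resolve(self, domain, metric):
        """Shared definitions apply everywhere; local ones only in their domain."""
        if metric in self._shared:
            return self._shared[metric]
        return self._spokes[domain][metric]


# Hypothetical definitions, invented for this example.
hub = SemanticHub({"revenue": "SUM(amount)"})
hub.register_spoke("marketing", {"cost_per_lead": "SUM(spend) / COUNT(lead_id)"})
hub.register_spoke("finance", {"gross_margin": "SUM(amount - cost) / SUM(amount)"})

print(hub.resolve("marketing", "revenue"))        # same definition in every domain
print(hub.resolve("finance", "revenue"))
print(hub.resolve("marketing", "cost_per_lead"))  # owned by the marketing spoke
```

Rejecting conflicting definitions outright is just one possible design choice; a real semantic layer might instead version definitions or route conflicts through a review step.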

In short, enterprises that want to deliver actionable insights at scale need to consider a semantic layer. This is the best way to turn data into a product that’s accessible to business users, effectively democratizing data throughout the organization.

Read the full whitepaper to learn more about using a semantic layer with a hub-and-spoke analytics approach: The Semantic Layer: Why It’s Critical to Analytics Success and Always Has Been.

