The modern data-driven organization creates, captures, and manages a massive amount of data from many sources. These data assets hold massive potential to generate business value: reducing costs, gaining operational efficiencies, finding new sources of revenue, and outperforming competition. Realizing this potential depends on transforming raw data assets into something that business domain experts can work with. The concept of a data mesh relies on the ability to efficiently manage this transformation so that disparate work groups can interact with data and build data products that deliver business value. Business-ready data is exactly what it sounds like: data assets that are ready for direct interaction with business domain experts.

Embracing a data mesh approach lets organizations decentralize data product creation to support intelligent decision-making across various business units (check out part 1 of our data mesh blog series to learn more). But, many make the mistake of supporting their data mesh with complex and loosely governed data pipelines, rather than a holistic strategy for delivering business-ready data to work groups. The rise of cloud data platforms and availability of open source ELT tools like dbt and Apache Airflow empowers data engineers to build and maintain pipelines to deliver subsets of data to workgroups. On the surface, this rise in open source tools seems like it would support data mesh. But, this proliferation of pipelines in practice is complex to maintain, impossible to govern, and constrained by the availability of data engineering resources. To achieve true data mesh success and put the power of creating relevant data products into the hands of your data users, your organization needs a scalable approach to delivering business-ready data.

What is Business-Ready Data?

We can think of “business-ready data” as the last mile of data transformation before it’s put in the hands of data consumers. Data engineering pipelines transform raw operational data — which is structured to support application logic and transaction capture — into a format that’s more amenable for using query logic to ask questions of the data. This transformed data is often referred to as analytics-ready. While it’s possible to let business analysts, data scientists, and SQL-savvy business managers interact with this analytics-ready data, to do so accurately requires sophisticated data skills, understanding of the business, and the ability to map data concepts to business significance. Interacting with analytics-ready data is not easy and can be easily misinterpreted.Business-ready data, by contrast, is much easier for every business department to use. It removes ambiguity of how to represent important business concepts, and encourages exploration by business domain experts.

The Rise of Analytics Data Pipelines

The proliferation of data pipelines that support analytics programs coincided with the shift from ETL (Extract-Transform-Load),to ELT (Extract-Load-Transform). ETL and ELT both perform the same function: transforming operational data that’s intended for application logic into a structure that’s intended to support analytics use cases including business intelligence, exploratory data analysis, and data sciences. They simply perform this function in two different orders. This switch from ETL to ELT happened because of the shift from on-premise to cloud data storage. Today’s flexible cloud environments make it possible for data to get transformed more easily, with less coordination with infrastructure teams. Data engineers are able to script and automate transformations with tools like dbt or Airflow, then orchestrate and automate transformations directly in their Snowflake, Databricks, or BigQuery environment.

This capability has created a new generation of data engineers who can support business requests for data sets (or data views), customized for domain specific use. As the business identifies the need or opportunity for a new data product (e.g. report, dashboard, exploratory analysis, AI/ML model, etc.), they request supporting data from their data engineers. Whether these data engineers are embedded within business units or operate from a centrally-managed team, they do their best to translate business requests into transforms that deliver a materialized view of the transformed data. Ensuring that the data is presented in a form that analytics consumers can work with, using their preferred BI or AI/ML tool, is the job of the data engineer . As data consumers articulate new data requirements, data engineers modify existing or create new pipelines delivering new views of data.

The most common type of transformations implemented by data engineers to bring raw data to the point it can be more easily analyzed include:

- De-normalizing tables

- Filtering extraneous data to make the size of data assets more useable

- Establishing an appropriate level of transactional aggregation

- Reducing overall size and complexity of joins

- Blending multiple large data sets with conformed dimensions

The Challenge of Creating Business-Ready Data

While cloud based ELT has enhanced the agility of data teams, the proliferation of data pipelines still poses a challenge. Even with careful implementation of shareable code repositories and standards, the reliance on a team of data engineers to satisfy the needs of data consumers can become challenging. In particular, it’s hard for data engineers to build business-ready data sets that are ready for analysis without further manipulation.

Building on the common data engineering transforms mentioned above, creating business ready data may include:

- Building a business-oriented view of data for data consumers.

- Creating a fully-vetted definition for each metric. They will then serve as a single source of truth for key business metrics like “revenue.”

- Establishing controlled, conformed dimensions. This leaves no chance of misrepresenting an important concept like a fiscal quarter or sales territory.

- Making blended data sets by augmenting historical data with third-party data or AI-generated predicted data sets.

Business-ready data provides data consumers with specific, rich data that can be directly used to answer their questions. It includes the right context, format, naming, and other clarifying factors to enable business users to work with data assets directly. But, it can’t be put into motion by the analytics or engineering teams alone. That would just slow down the process — and still make it difficult for data to reflect the exact business moment at the right time. Instead, this transformation process has to be flexible and agile. The best way to do this is by giving the responsibility of translating analytics-ready data into business-ready data to the users themselves — the people who live and breathe the changing condition of the business on a daily basis.

How a Data Mesh Approach and Business-Ready Data Work Together

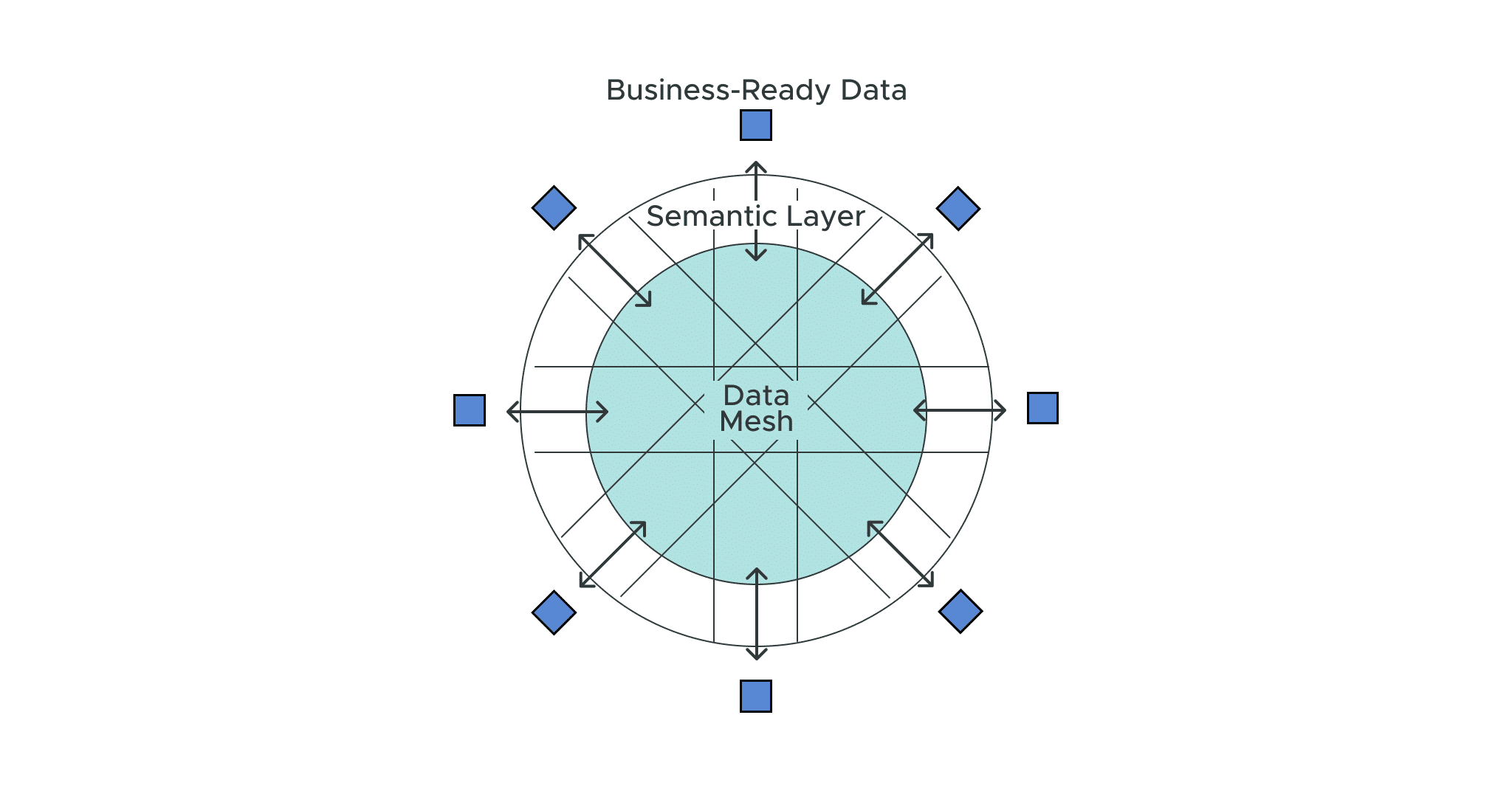

But, empowering so many users to translate analytics-ready data into business-ready data, in a scalable fashion, can be complex. This is where the concept of a data mesh comes into play. The data mesh approach facilitates a decentralized analytics architecture where business domains are responsible for their data. A big part of this approach revolves around creating data as a product, owned and designed by business units. Ideally, distributed teams directly interact with data assets to build their own data products — BI assets like dashboards, ad hoc assets like a pivot table or excel model, or AI assets managed in a notebook. But this autonomy also has to come with some form of central standardization. So, a successful data mesh practice requires a balance between centralized governance and autonomy.

The Value of Managing Business-Ready Data in a Semantic Layer

How do organizations produce business-ready data like this — somehow balancing this need for agility and autonomy with governance? This answer is a semantic layer.

A semantic layer empowers business analysts to uphold organization-wide governance by defining metrics such as revenue, and inventory with conformed dimensions such as fiscal year, product and customer. Then, the data consumers can use this pre-established framework to respond to each business moment as needed. The semantic layer then enables teams to easily create data products. Once the business-ready data is available in the semantic layer platform, it can immediately be consumed by the analytics and AI consumers via the BI tool of their choice.

Learn more about how the semantic layer delivers curated business-ready data to broader audiences, ideal for facilitating data mesh success. Check out the next blog post in our data mesh series, or get a refresher on the definition of data mesh in our first post, “The Definition of Data Mesh: What Is It and Why Do I Need One?”, or read the entire data mesh series in the white paper, “The Principles of Data Mesh and How a Semantic Layer Brings Data Mesh to Life“.

SHARE

Case Study: Vodafone Portugal Modernizes Data Analytics