A Semantic Layer is an incredibly powerful piece of technology for any data-driven organization. At AtScale, our goal is to ensure that all companies, large and small, can use the best-in-class semantic layer. That goal is the basis for our consumption-based pricing model, and it is why companies can get started with AtScale for as little as $2,500 per month.

The Foundation of a Semantic Layer – The Semantic Model

AtScale’s pricing is consumption-based, meaning customers pay for what they consume. Our customers turn to our universal semantic layer because of its incredibly powerful multi-dimensional query engine, which lets any business user live-query their data in the cloud through the tools they already use: Power BI using its native DAX; Excel using its native MDX; Tableau, Looker, and others using SQL; custom applications using a RESTful API; and LLM-powered chat interfaces (coming very soon).

In layman’s terms, any business user can slice and dice their data to any granularity, on any data size, by any dimension, including time, geography, product type, category and more. Finance teams can easily generate demand forecasts, insurance brokers can price premiums more profitably, investment banks can react more quickly to fund performance by asset type, retailers can stock shelf inventory more intelligently by regional preferences and times of year, and manufacturers can better optimize their supply chains by part and process. To learn more, see our customer case studies with our universal semantic layer.

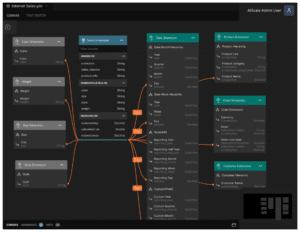

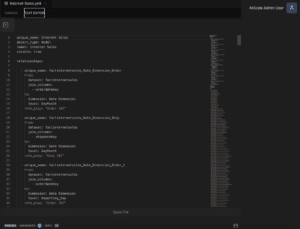

The first thing a customer does with AtScale is build a semantic model either using code or with our visual interface. They define their measures, calculations, and hierarchies. Think… “How does our company define revenue, profit, sales tax, geography (country > state/province > city > zip code)?” These data definitions are what analysts, data scientists, and executives use to drive the KPIs or predictive analysis they need for their decision-making.

We call these data definitions “Semantic Objects”.

AtScale’s consumption billing metric is: the number of semantic objects a customer deploys, metered monthly.

We do not bill for data size, number of users or number of queries. Bring the biggest data set you have in the cloud. Have every employee, customer or supplier query the AtScale semantic model using any query interface they want.

Deployed Semantic Objects

For readers who might have skipped ahead to this section, I’ll repeat: AtScale’s consumption billing metric is based on the number of semantic objects a customer deploys, metered monthly.

We use the term “deployed” semantic objects (DSO) to distinguish a semantic object that has been defined in a semantic model and made available to consumers in their analytics applications from one that has been defined but not yet made available. Because we use Git for semantic model version control, we use the term “deployed” to denote when semantic objects have been made available, whether to a small cohort of analysts for testing, to the company’s entire employee base, or to any group of analytics consumers in between.

AtScale only bills for objects that are visible to an end user in an analytics application. For example, if a semantic object shows up in Tableau, Power BI, Excel or Looker, ready to be dragged onto the reporting canvas, queried through a pivot table interface, or queried through an LLM, it is considered a “deployed semantic object”. If an analytics engineer is building out additional semantic objects that are not yet ready to be tested or used for “production” use cases, we do not bill for those semantic objects.
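The billing rule above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not AtScale’s implementation: the `SemanticObject` class, its `deployed` flag, and the `billable_count` function are hypothetical names, and we assume that “visible to an end user” can be reduced to a single yes/no flag per object at the time of the monthly count.

```python
from dataclasses import dataclass


@dataclass
class SemanticObject:
    # Hypothetical representation of one semantic object (measure, calculation, hierarchy, ...).
    name: str
    kind: str
    deployed: bool  # assumed flag: True once the object is visible in an analytics application


def billable_count(objects: list[SemanticObject]) -> int:
    """Count the objects that would be metered for one month: deployed objects only."""
    return sum(1 for obj in objects if obj.deployed)


model = [
    SemanticObject("Revenue", "measure", deployed=True),
    SemanticObject("Profit", "calculation", deployed=True),
    SemanticObject("Geography", "hierarchy", deployed=True),
    SemanticObject("Sales Tax", "measure", deployed=False),  # still in development, not billed
]

print(billable_count(model))  # 3
```

Objects still under development simply stay out of the count until they are deployed, which matches the rule that in-progress work is not billed.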

What am I Committing to When Purchasing Deployed Semantic Objects?

Similar to other enterprise software companies in the data and analytics space, customers commit to a certain number of deployed semantic objects that they will consume within the term of their contract.

Once we have an estimate of the number of semantic objects the customer will deploy each month, we multiply it by the number of months in the contract term (e.g., 12, 24, or 36 months).

AtScale bills for deployed semantic objects and we meter those objects monthly.

The number of semantic objects multiplied by the duration of the contract term equals the number of deployed semantic objects the customer will commit to consuming.

As an example, we’ve found that the average semantic model contains roughly 250 semantic objects. If a customer wants to sign a 12-month contract based on a 250-object semantic model, the total number of DSO they will commit to consuming during their 12-month term is 3,000.

250 DSO per month × 12 months = 3,000 DSO to be consumed at any point during the 12-month contract term
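The commitment arithmetic can be written as a small Python sketch. The function names are hypothetical, and the `remaining_dso` helper assumes that each month’s metered count is drawn down from the committed pool; the post states that the commitment may be consumed at any point during the term but does not describe how the balance is tracked.

```python
def committed_dso(monthly_objects: int, term_months: int) -> int:
    """Total DSO committed over the term: semantic objects per month times months in the term."""
    return monthly_objects * term_months


def remaining_dso(commitment: int, metered_by_month: list[int]) -> int:
    """DSO left in the commitment after subtracting each month's metered count (assumed draw-down)."""
    return commitment - sum(metered_by_month)


commitment = committed_dso(250, 12)
print(commitment)  # 3000

# Hypothetical metered usage for the first three months of the term.
print(remaining_dso(commitment, [100, 200, 250]))  # 2450
```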

Estimating the Number of Deployed Semantic Objects

There are two questions we consider with customers when estimating DSO:

- Is the data supporting the AtScale semantic model landed in the customer’s cloud data platform (Snowflake, Databricks, Google BigQuery, etc.)?

- Will the semantic model be built from scratch, or based on an existing semantic model from a BI tool such as Power BI or an existing cube such as SQL Server Analysis Services?

When estimating DSO, we focus on workloads that have already landed in a cloud data platform, because they are ready to be activated by a semantic model. As customers prioritize their first semantic model, we suggest starting with one whose data is already in the cloud.

The next step is to understand the current state of the semantic model the customer wants to build, or whether it is greenfield. For existing data models such as Power BI semantic models, dbt metrics models, SQL Server Analysis Services cubes or LookML models, we have tools to help you estimate the number of semantic objects in the existing model. If it’s greenfield, we can help you think through the use case and provide sizing benchmarks based on similar models built by other customers. Again, we’ve found that the average semantic model contains roughly 250 semantic objects.

We’re very aware that a new customer will not be deploying their estimated monthly DSO in month one. We work with our customers to understand their ability to ramp up the deployment of their DSO to better estimate the true amount of DSO a customer should consider committing to in their first contract.
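A ramp-up can be folded into the estimate with a short Python sketch. Everything here is an illustrative assumption: the `ramped_commitment` function is hypothetical, the 20/40/60/80 percent ramp is an invented example rather than an AtScale benchmark, and every month after the ramp is assumed to run at the full monthly target.

```python
def ramped_commitment(target_monthly: int, term_months: int, ramp_percent: list[int]) -> int:
    """Estimate total DSO over a term when deployment ramps up before reaching the target.

    ramp_percent lists the share (in whole percent) of the monthly target deployed in each
    early month; months after the ramp are assumed to run at the full target.
    """
    total = 0
    for month in range(term_months):
        if month < len(ramp_percent):
            # Integer arithmetic keeps the object counts whole.
            total += target_monthly * ramp_percent[month] // 100
        else:
            total += target_monthly
    return total


# Hypothetical ramp: 20%, 40%, 60%, 80% of a 250-object model in months 1-4, then full.
print(ramped_commitment(250, 12, [20, 40, 60, 80]))  # 2500
```

Compared with the flat 250 × 12 = 3,000 estimate, this invented ramp lowers the first-term commitment to 2,500 DSO.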

Volume-Based Discounting

Volume-based discounting is built into the AtScale pricing model: the more DSO a customer commits to consuming during the term of their contract, the lower the price per DSO. Our customers consume anywhere from the low hundreds of DSO per month up to tens and hundreds of thousands. Customers consuming in the low hundreds of DSO per month pay more per object than customers consuming in the tens to hundreds of thousands.
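The shape of a volume discount can be illustrated with a tier lookup in Python. The tier thresholds and per-DSO prices below are invented and expressed in arbitrary units; they are not AtScale prices. We also assume an all-units scheme, where the whole commitment is priced at a single tier’s rate; the post does not say whether AtScale uses this or graduated tiers.

```python
import bisect

# Hypothetical tiers: (minimum DSO committed over the term, price per DSO in arbitrary units).
# Illustrative only; not AtScale list prices.
TIERS = [(0, 10), (10_000, 8), (100_000, 6), (1_000_000, 4)]
THRESHOLDS = [minimum for minimum, _ in TIERS]


def price_per_dso(committed: int) -> int:
    """Return the rate of the highest tier whose minimum the commitment reaches."""
    index = bisect.bisect_right(THRESHOLDS, committed) - 1
    return TIERS[index][1]


def total_price(committed: int) -> int:
    # All-units volume discount (assumed): the whole commitment is priced at one tier's rate.
    return committed * price_per_dso(committed)


print(price_per_dso(3_000))    # 10
print(price_per_dso(120_000))  # 6
```

A graduated scheme would instead price each slice of the commitment at its own tier’s rate; either way, the per-DSO price falls as the commitment grows.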

Getting Started

There are several ways to get started with AtScale. Developers who want to get their hands on AtScale to build their own semantic models can download our FREE Developer Edition. Organizations that want to understand whether AtScale supports their semantic layer initiatives can request a free trial, and an account representative will reach out to help evaluate whether AtScale is the right fit to achieve their business objectives.

For any other pricing questions, please reach out to us through our pricing request page.
